Abstract

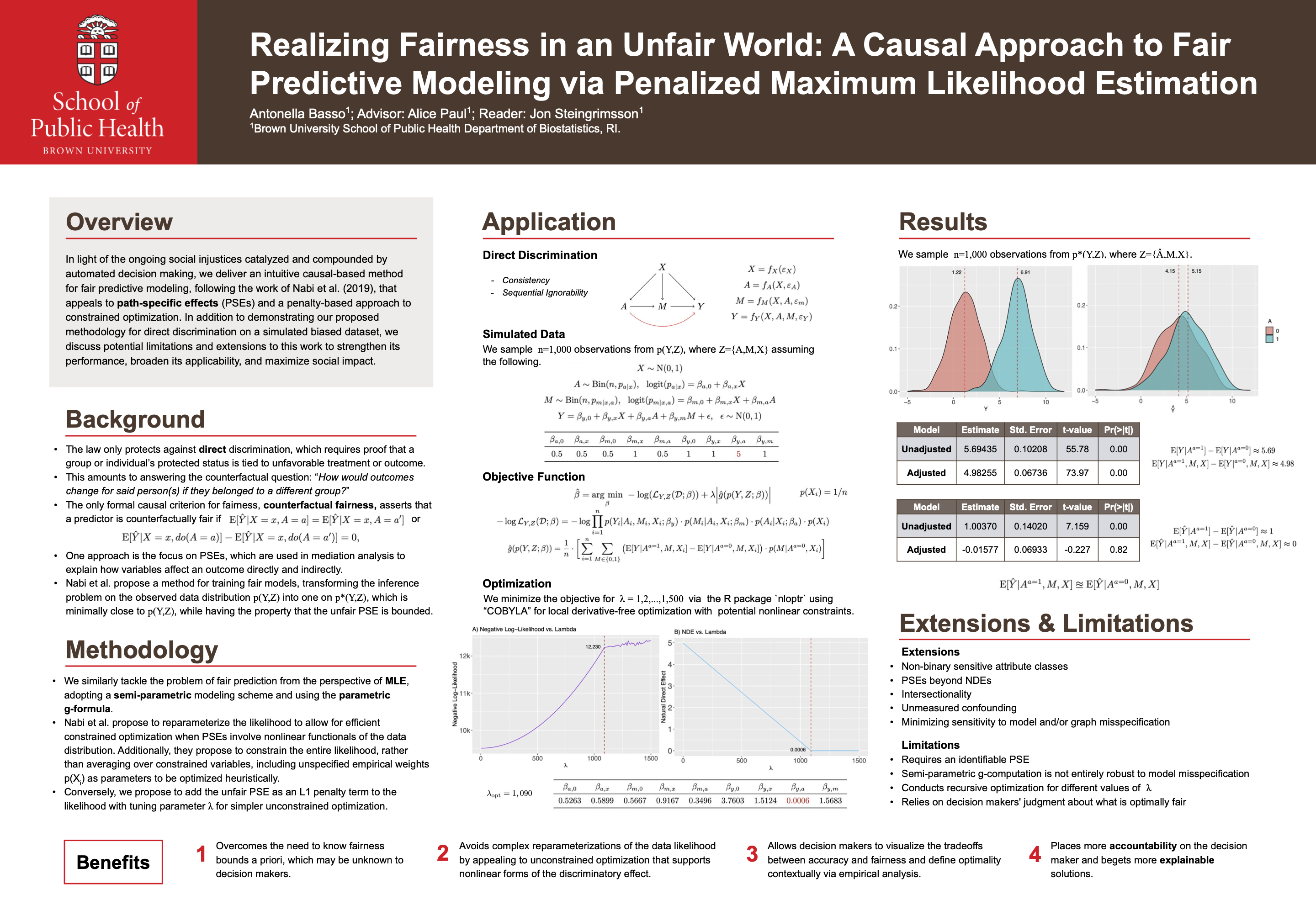

In light of the ongoing social injustices catalyzed and compounded by automated decision making, the primary aim of this research is to deliver an intuitive, causally grounded method for fair predictive modeling that combines path-specific effects (PSEs) with a penalty-based approach to maximum likelihood estimation. Specifically, we propose an adaptation of the work of Nabi, Malinsky, and Shpitser (2022) that simultaneously simplifies the (constrained) optimization problem, produces more explainable results, and holds decision makers accountable for the consequences of predictions. In addition to demonstrating the proposed methodology on a simulated biased dataset with direct discrimination, we discuss important limitations of this approach and provide recommendations for maximizing social impact. We also outline possible extensions that we believe can substantially strengthen its performance and broaden its applicability. More generally, we seek to show how grounding the fairness problem within a counterfactual reasoning framework allows us to formalize discrimination in a way that is most consistent with rational thought, as well as with the moral and legal principles of equity and justice that govern society.

“Counterfactuals are the building blocks of moral behavior as well as scientific thought. The ability to reflect on one’s past actions and envision alternative scenarios is the basis of free will and social responsibility.” — Judea Pearl, “The Book of Why”

Read Report

Access R Code

Public Health Research Day
